Statistical analysis of a survey should be done before data collection

Introduction

I’ve seen things

- I’ve been on the General Human Research Ethics Committee and Animal Research Ethics Committee for over 4 years

- I’ve looked at over 1000 ethics applications

- I’ve consulted on medical clinical trials and Health Science ethics processes

- I’ve done statistical consultation on over 200 projects

- I’ve seen the good, the bad, and the ugly

There is a particular issue in ethics circles that is buzzing right now that I would like to quickly talk about: Controlling the probability of Type 1 and Type 2 statistical errors

Example questionnaire

The usual pattern

- I generated some survey questions

- I obtained a bunch of responses

- I did some common analyses

- t-tests,

- correlations,

- regression

- The results appear adequate for interpretation

The questions

Raw responses

Summary of the responses

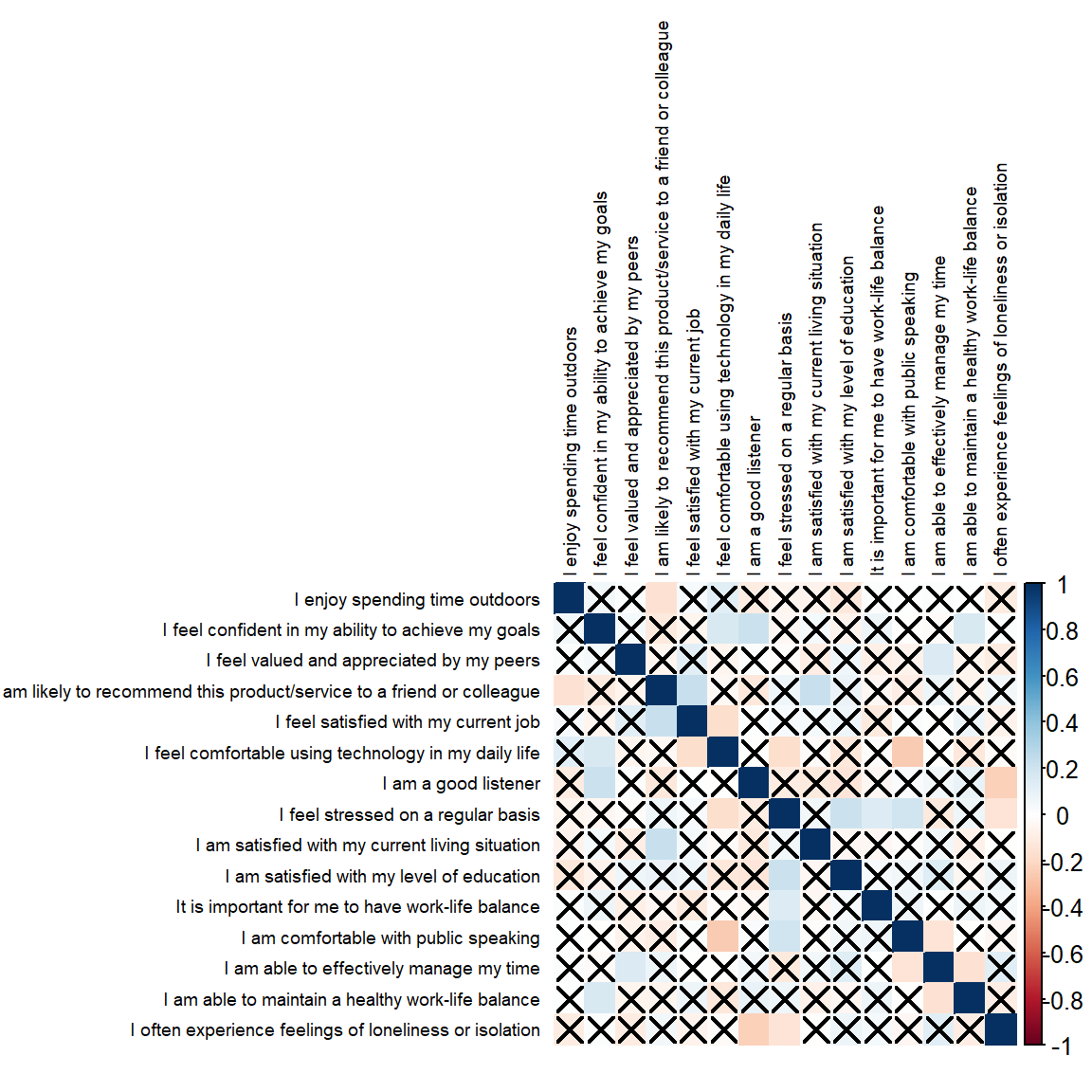

Slice of Correlations

Reliability

- A reliability analysis of the survey produces a Cronbach alpha value of 0.61

- The ideal range is 0.7 to 0.95

- The omega h value is the same, as is omega total

- These values are a little bit low, but I’ve seen many surveys with lower values

- G6 value is 0.89

- Exploratory factor analysis gives strong factors to dig into

Regression

- A regression analysis of qualification level on the other questions yields an R squared value of 0.74, implying that 74% of the variation in qualification level can be explained by the variation in the explanatory variables

- However, looking at the p-values reveals that no individual variable is significant, which should be throwing up big red flags

So what’s the problem?

The results seem good, useful, and interesting; but they are an illusion.

- The data is randomly generated by myself in R

- I selected response distributions that I typically observe, except for one twist:

- The responses in my data have ZERO correlation

- Every significant correlation is a false positive

- The true reliability is zero, all deductions and inferences are invalid

- There are no relationships in this data between anything!

- The questions come from ChatGPT

- I just asked it for random survey questions until I had enough

How is that possible?

The assumptions are violated

Statistical analysis is always based on assumptions

- Even the most robust non-parametric tests assume that:

- Your sample is representative of the population

- Your observations are conditionally independent after controlling for key factors

- You are doing a maximum of 1 statistical hypothesis test on a data set ever

- So 1 research question, 1 correlation or 1 regression or 1 t-test for an entire survey

- Any more and you run into the multiple testing problem, which requires adjustments

Types of errors

Statistical hypothesis testing formally defines two commonly occurring errors:

- A Type 1 error is a false positive

- The survey I made up shows this clearly

- The textbook solution is to adjust the testing procedure so that the probability of a false positive is kept below a chosen significance level across the study

- A Type 2 error is a false negative

- Common when the sample size is too small

- A prospective power analysis can prevent this

- Can also be caused by inaccurate measurement

- Not enough levels on a scale (7+ recommended)

- Questions not in simple yet clear language that participants understand

- Common when the sample size is too small

How to fix it

Better planning

The solution is not to abandon statistics. The statistical analysis, if done right, can go a long way in helping us to talk less nonsense.

- We need shorter, more focused questionnaires

- We need to put thought into what we want to know, from who, and how to extract it reliably

- We need to plan the full analysis in detail before collecting data

- In medical trials the full analysis is often planned out to the finest detail, including power analysis, a year before the first participant is recruited

The best surveys

The best surveys in the world have 3 questions:

- Informed consent

- Rate your perception of X on a scale of 0 to 10

- Explain your rating

- The absolute best surveys in the world have 0 questions, where the responses to the above are implied by directly observed behaviour following randomised assignment

- A/B testing is a form of survey and can produce very definitive results if the research question can be formulated in a suitable way

Let’s be practical

- Obviously we can’t do a whole new survey every time we want to ask a slightly different question

- It is far more convenient to us to go out once and get responses about everything we are interested in

But if we don’t have a sound statistical analysis plan in place in advance then we are wasting peoples’ time because we are not getting real information out of them

Data is not the same as information and information is what we need because that can be turned into knowledge

Sample size / power analysis

- There are good sample size calculators and bad sample size calculators

- If your sample size calculator asks for the population size then it is a bad one and you need to throw it away immediately

- The population size is not a term in any standard statistical test or approach and is almost always irrelevant

- A good sample size calculator will ask you exactly what numbers you expect to see in your responses and how much you expect them to vary

- It must also consider how many tests you will end up actually doing on the same data

Connecting the dots

People avoid doing prospective power analysis, even though it is a critical step in scientific study, because it seems daunting to ask what results you expect to see in terms of numbers while drawing up a questionnaire about human perceptions of human endeavours.

The solution is simple though: take time when setting up the questionnaire to formally connect every question to its research objective

- You will have to do this anyway at some point

- It’s better to do it before sending out the questionnaire because then you can remove questions that are not relevant and stop wasting participants’ time

- Remember that every unnecessary question you ask reduces the quality of your feedback by inducing fatigue or frustration in respondents

- e.g. if you make a question I don’t want to answer compulsory that does not induce me to ponder longer and overcome my reluctance, instead it induces me to close the survey window (biasing your results)

- Remember that every unnecessary question you ask reduces the quality of your feedback by inducing fatigue or frustration in respondents

Details

Suppose you want to know whether there is a difference on average between men and women in terms of their propensity to resign in the face of being denied promotion repeatedly

- You first decide on how to measure it. Suppose we use a 7 step Likert scale question.

- Then you ask yourself what responses you expect to see on this scale and how much you expect those responses to vary from person to person

- Perhaps we expect mostly “Slightly agree” from women and “Neutral” from men, but with two thirds of women varying between “Slightly disagree” and “Strongly Agree” and two thirds of men varying between “Disagree” and “Agree”

- Then we can use a t-test power calculation to approximate how many independent people we need to approach in order to be say 80% sure of detecting a difference at a 5% significance level

Power curve

Multiple items

- The power curve is for a single item

- With multiple items additional considerations come up:

- Do you want any of them to be significant?

- Do you want all of them to be significant?

- Are the items correlated?

- Are you adjusting for that in your testing?

Interviews or observations or explorations

The same principles apply (but with less maths)

- Fancy statistics is (obviously) not compulsory for good science

- My favourite studies to work on are the ones where the results are so obvious that anybody can see them

- Or where the logic is so clear that it doesn’t need substantiation

- But good planning is compulsory for good science

- Every interview question must connect to the research objectives

- With a clear analysis plan

- And forethought as to what responses are expected, versus what responses will constitute ‘unexpected results’

- Every interview question must connect to the research objectives

What if I’m just exploring

- Exploratory analysis and hypothesis testing are partially contradictory

- There is nothing wrong with using p-values as a sorting tool, to start your exploration where you see the smallest p-values

- But when doing explorations it is dangerous to compare a p-value to a significance level

- Instead you can use intervals to quantify uncertainty

Intervals convey much more useful information than p-values anyway

Conclusion

The end

Thank you for your time and attention.

You can read more about power analysis on Wikipedia or my website.

You can also engage the UFS Statistical Consultation Unit for advice or assistance.

.

This presentation was created using the Reveal.js format in Quarto, using the RStudio IDE. Font and line colours according to UFS branding, and background image by Bing AI.

2023/06/22 - Survey analysis